Introduction

The 2020 elections in the US are around the corner. Fake news published on social media is a HUGE problem around election time. While some fake news is produced purposefully to skew election results or to make a quick buck through advertising, false information can also be shared by misinformed individuals in their social media posts. These posts go viral in the interconnected world of social media, and people start assuming that popular stories are indeed true. The NY Times wrote a very good article on how fake news goes viral.

Detecting fake news is not an easy task for a machine learning model. Many of these stories are very well written. Still, machine learning can help because:

- It can detect a writing style similar to what has been marked as fake in its database

- It can detect when the version of events in a story conflicts with what is known to be true

In this blog we build a fake news detector using BERT and T5 Transformer models. The T5 model does quite well, with an impressive 80% accuracy in detecting actual fake news published around the 2016 elections.

The code for the “Fake News Detector” is public on my GitHub here.

I run a machine learning consultancy, Deep Learning Analytics. At Deep Learning Analytics, we are very passionate about using data science and machine learning to solve real-world problems. Please reach out to us if you are looking for NLP expertise for your business projects.

Data Set

For this blog, we have used the Kaggle data set — Getting Real about Fake News. It contains news and stories marked as fake or biased by the BS Detector, a Chrome Extension by Daniel Sieradski. The BS detector labels some websites as fake and then any news scraped from them is marked as fake news.

The data set contains about 1500 news stories, of which about 1000 are fake and 500 are real. What I like about this data set is that it has stories captured in the final days of the 2016 election, which makes it very relevant to detecting fake news around election time. However, one of the challenges in labelling fake news is that time and effort need to be spent to vet each story and its correctness. The approach taken here is to consider all stories from websites marked as BS to be fake. This means some true stories from flagged websites may be labelled fake.
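The site-level labelling rule can be sketched roughly as follows. This is only an illustration: the `site_url` field name and the domains are hypothetical and are not taken from the Kaggle data set.

```python
# Minimal sketch of site-level labelling.
# FLAGGED_SITES and the `site_url` field are hypothetical placeholders.
FLAGGED_SITES = {"example-bs-site.com", "another-flagged.net"}


def label_story(story: dict) -> str:
    """Return "Fake" if the story comes from a flagged site, else "Real"."""
    return "Fake" if story["site_url"] in FLAGGED_SITES else "Real"


stories = [
    {"site_url": "example-bs-site.com", "text": "some story text"},
    {"site_url": "trusted-paper.org", "text": "another story text"},
]
labels = [label_story(s) for s in stories]
print(labels)
```

Note that the label depends only on the source website, never on the story text, which is why an accurate story from a flagged site would still be marked fake.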

An example of a story marked as real is:

ed state \nfox news sunday reported this morning that anthony weiner is cooperating with the fbi which has reopened yes lefties reopened the investigation into hillary clintons classified emails watch as chris wallace reports the breaking news during the panel segment near the end of the show \nand the news is breaking while were on the air our colleague bret baier has just sent us an email saying he has two sources who say that anthony weiner who also had coownership of that laptop with his estranged wife huma abedin is cooperating with the fbi investigation had given them the laptop so therefore they didnt need a warrant to get in to see the contents of said laptop pretty interesting development \ntargets of federal investigations will often cooperate hoping that they will get consideration from a judge at sentencing given weiners wellknown penchant for lying its hard to believe that a prosecutor would give weiner a deal based on an agreement to testify unless his testimony were very strongly corroborated by hard evidence but cooperation can take many forms and as wallace indicated on this mornings show one of those forms could be signing a consent form to allow the contents of devices that they could probably get a warrant for anyway well see if weiners cooperation extends beyond that more related

And a fake story is:

for those who are too young or too unwilling to remember a trip down memory lane \n debut hillary speaks at wellesley graduation insults edward brooke senates lone black member \n watergate committee says chief counsel jerry zeifman of hillarys performance she was a liar she was an unethical dishonest lawyer she conspired to violate the constitution the rules of the house the rules of the committee and the rules of confidentiality \n cattlegate as wife of arkansas governor she invests in cattle futures makes \n whitewater clintons borrow money to launch whitewater development corporations several people go to prison over it clintons dont \n bimbo eruptions bill and hillary swear to steve kroft on minutes bill had nothing to do with gennifer flowers \n private investigators we reached out to them hillary tells cbs steve kroft of bills women i met with two of them to reassure them they were friends of ours they also hire pis to bribe andor threaten as many as twodozen of them \n health care reform hillary heads secret healthcare task force sued successfully for violating open meeting laws subsequent plan killed by democraticcontrolled house \n ....

The stories average 311 words, with some running to more than 1000 words.
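Length statistics like these can be computed with a few lines of Python. The sketch below assumes the stories are available as a plain list of strings and counts whitespace-separated words; the sample texts are made up.

```python
from statistics import mean


def word_count_stats(texts):
    """Summarise story lengths in whitespace-separated words."""
    counts = [len(t.split()) for t in texts]
    return {
        "mean": mean(counts),
        "max": max(counts),
        "over_1000": sum(c > 1000 for c in counts),
    }


# Toy input for illustration; the real stories are much longer.
sample = ["one two three", "four five", "six"]
stats = word_count_stats(sample)
print(stats)
```

Word counts are only a rough proxy for token counts, since a subword tokenizer usually produces more tokens than words.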

Model Training

For this exercise, we trained two models from HuggingFace: BERT with a sequence classification head, and a T5 Transformer model with a conditional generation head. The BERT model reached an accuracy of 75% in detecting fake news on the validation set, whereas the T5 model got to 80%. So the rest of the blog focuses on how a T5 model can be trained.

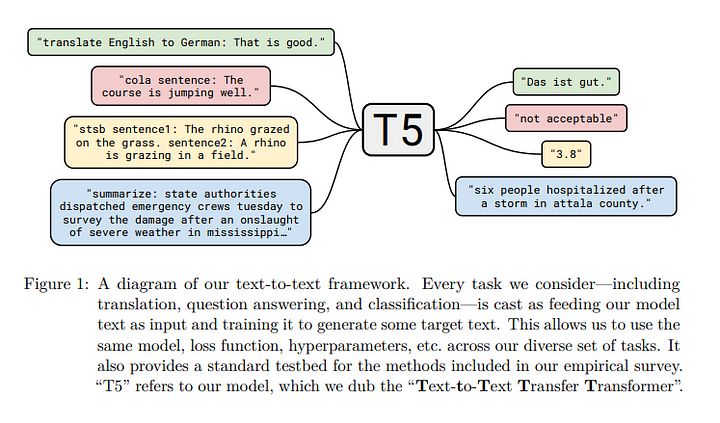

T5 is a text-to-text model, meaning it can be trained to go from input text in one format to output text in another. This makes the model very versatile. I have personally used it for text summarization. Check my blog here. I have also used it to build a trivia bot which can retrieve answers from memory without any provided context. Check this blog here.

Changing our classification problem to text to text format

The HuggingFace T5 implementation contains a conditional generation head that can be used for any task. For our task, the input is the actual news story and the output is text: Real or Fake.
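As a rough sketch, casting a labelled story into this text-to-text form could look like the following. The `classify: ` prefix matches the sample input shown in the results section; the function name and the boolean `is_fake` flag are assumptions made for illustration.

```python
def to_text_to_text(story: str, is_fake: bool, prefix: str = "classify: "):
    """Turn a labelled story into a (source, target) pair of strings."""
    source = prefix + story.strip()
    target = "Fake" if is_fake else "Real"
    return source, target


source, target = to_text_to_text("  russias most potent weapon hoarding gold ", True)
print(source)
print(target)
```

Because the target is plain text, the same model and loss can be reused for other tasks just by changing the prefix and the target strings.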

For the input text, we set the max token length to 512. If a story is shorter than 512 tokens, <PAD> tokens are added to the end. If it is longer, it gets truncated. For the output, the token length is set to 3. The code snippet for this is below. Please find the full code on my GitHub here.

    # Tokenize the story: pad or truncate to the fixed input length
    source = self.tokenizer.batch_encode_plus(
        [input_], max_length=self.input_length,
        padding='max_length', truncation=True, return_tensors="pt")

    # Tokenize the label: the target is at most 3 tokens long
    targets = self.tokenizer.batch_encode_plus(
        [target_], max_length=3,
        padding='max_length', truncation=True, return_tensors="pt")
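To make the effect of `padding='max_length'` and `truncation=True` concrete, here is a simplified pure-Python sketch of what happens to a list of token ids. It is not the tokenizer's actual implementation: it ignores the end-of-sequence token that the real tokenizer appends, and the pad id of 0 is an assumption about the T5 vocabulary.

```python
PAD_ID = 0  # assumption: pad token id used by the T5 tokenizer


def pad_or_truncate(token_ids, max_length, pad_id=PAD_ID):
    """Return (ids, attention_mask), both exactly max_length long."""
    ids = list(token_ids[:max_length])   # truncate long inputs
    mask = [1] * len(ids)                # 1 marks a real token
    n_pad = max_length - len(ids)
    return ids + [pad_id] * n_pad, mask + [0] * n_pad  # 0 marks padding


short_ids, short_mask = pad_or_truncate([5, 6, 7], max_length=5)
long_ids, long_mask = pad_or_truncate([1, 2, 3, 4, 5, 6, 7], max_length=5)
print(short_ids, short_mask)
print(long_ids, long_mask)
```

The attention mask is what lets the model ignore the padded positions, which is why it is passed alongside the token ids.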

Defining the T5 Model Class

Next we define the T5 fine-tuner class. The forward pass is the same as for other Transformer models. Since T5 is a text-to-text model, the tokenized inputs and targets are both passed to the model, along with their attention masks.

    def forward(self, input_ids, attention_mask=None, decoder_input_ids=None,
                decoder_attention_mask=None, lm_labels=None):
        # Pass encoder inputs, decoder inputs and labels to the underlying T5 model
        return self.model(
            input_ids,
            attention_mask=attention_mask,
            decoder_input_ids=decoder_input_ids,
            decoder_attention_mask=decoder_attention_mask,
            labels=lm_labels
        )
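One detail that often accompanies a forward pass like this is how padded target positions are treated in the loss. A common practice with HuggingFace sequence-to-sequence models is to replace pad ids in the labels with -100, the default ignore index of PyTorch's cross-entropy loss. Whether the code in this blog does exactly this is an assumption; the sketch below shows the idea on plain Python lists, with made-up token ids and an assumed pad id of 0.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's cross-entropy loss
PAD_ID = 0           # assumption: pad token id used by the T5 tokenizer


def mask_pad_labels(label_ids, pad_id=PAD_ID):
    """Replace pad ids so that padded positions do not contribute to the loss."""
    return [IGNORE_INDEX if tok == pad_id else tok for tok in label_ids]


# Hypothetical target of length 3: one label token, end-of-sequence, one pad
masked = mask_pad_labels([7, 1, 0])
print(masked)
```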

In the generation step, the output of the decoder is limited to a token length of 3 as shown below:

    def _generative_step(self, batch):
        t0 = time.time()
        inp_ids = batch["source_ids"]

        # Generate the label text, capped at 3 tokens
        generated_ids = self.model.generate(
            batch["source_ids"],
            attention_mask=batch["source_mask"],
            use_cache=True,
            decoder_attention_mask=batch['target_mask'],
            max_length=3
        )

        # Decode predictions and targets back to text for comparison
        preds = self.ids_to_clean_text(generated_ids)
        target = self.ids_to_clean_text(batch["target_ids"])

The model measures accuracy by checking whether the generated label (fake or real) matches the actual label.
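A minimal sketch of this exact-match accuracy is shown below. The function name is hypothetical, and the whitespace and case normalisation is an assumption added for robustness rather than something taken from the original code.

```python
def label_accuracy(preds, targets):
    """Fraction of generated labels that match the true labels."""
    if len(preds) != len(targets):
        raise ValueError("preds and targets must have the same length")
    if not targets:
        return 0.0
    matches = sum(
        p.strip().lower() == t.strip().lower()
        for p, t in zip(preds, targets)
    )
    return matches / len(targets)


acc = label_accuracy(["Fake", "real", "Fake", "Real"],
                     ["Fake", "Real", "Real", "Real"])
print(acc)
```

Since the model generates free text, any output other than the expected label simply counts as a miss.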

Model Training and Results

T5 small was trained for 30 epochs using a batch size of 8. The model took about an hour to train. Weights and Biases was used to monitor training. The online GitHub code has wandb integrated into PyTorch Lightning for training.

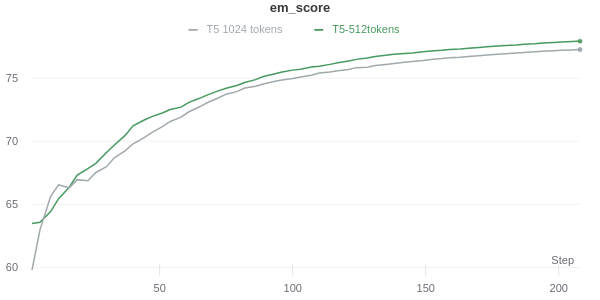

I trained T5 small with token lengths of 512 and 1024. Both models performed similarly and reached an accuracy score of close to 80%.

Testing T5 on the validation set shows that the model has an impressive ability to detect fake news.

Input Text: classify: russias most potent weapon hoarding gold shtfplancom this article was written by jay syrmopoulos and originally published at the free thought project editors comment he who holds the gold makes the rules fresh attempts at containing russia and continuing the empire have been met with countermoves russia appears to be building strength in every way putin and his country have no intention of being under the american thumb and are developing rapid resistance as the us petrodollar loses its grip and china russia and the east shift into new currencies and shifting world order what lies ahead it will be a strong hand for the countries that have the most significant backing in gold and hard assets and china and russia have positioned themselves very well prepare for a changing economic landscape and one in which selfreliance might be all we have russia is hoarding gold at an alarming rate the next world war will be fought with currencies by jay syrmopoulos with all eyes on russias unveiling their latest nuclear intercontinental ballistic missile icbm which nato has dubbed the satan missile as tensions with the us increase moscows most potent weapon may be something drastically different the rapidly evolving geopolitical weapon brandished by russia is an ever increasing stockpile of gold as well as russias native currency the ruble take a look at the symbol below as it could soon come to change the entire hierarchy of the international order potentially ushering in a complete international paradigm shift...

Actual Class: Fake

Predicted Class from T5: Fake

Conclusion

T5 is an awesome model. It has made it easy to fine-tune a Transformer for any NLP problem with sufficient data. This blog shows that T5 can do an impressive job of detecting fake news.

I hope you give the code a try and train your own models. Please share your experience in the comments below.

At Deep Learning Analytics, we are extremely passionate about using Machine Learning to solve real-world problems. We have helped many businesses deploy innovative AI-based solutions. Contact us through our website here if you see an opportunity to collaborate.

References

- T5 Transformer

- Huggingface Transformers

- Real vs Fake News