Introduction

Most of the world is currently affected by the COVID-19 pandemic. For many of us this has meant quarantine at home, social distancing, and disruptions in our work environment. I am very passionate about using data science and machine learning to solve problems. Please reach out to me through here if you are a health services company looking for data science help in fighting this crisis.

Media outlets around the world are constantly covering the pandemic: the latest stats, guidelines from your government, tips for keeping yourself safe, and so on. It can quickly get overwhelming to sort through all the information out there.

In this blog I want to share how you can use BERT-based question answering to extract information from news articles about the virus. I provide code tips on how to set this up on your own, and share where this approach works and where it tends to fail. My code is also uploaded to GitHub.

Original full story published on my website here.

What is Question Answering?

Question Answering is a field of Computer Science under the umbrella of Natural Language Processing. It involves building systems that are capable of answering questions from a given piece of text. It has been a very active area of research for the past 2–3 years. The initial Question Answering systems were mainly rule-based and restricted to specific domains, but now, with the availability of better computing resources and deep learning architectures, we are getting to models that have generalized question answering capabilities.

Most of the best-performing Question Answering models are now based on the Transformer architecture. To learn more about transformers, I recommend this YouTube video. The original Transformer is an encoder-decoder architecture, but models such as BERT use only the encoder and treat SQuAD-style question answering as a span extraction problem: the model takes the question and the context paragraph as input and predicts the start and end positions of the answer within the paragraph. BERT, ALBERT, XLNet and RoBERTa are all commonly used Question Answering models.
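For extractive models fine-tuned on SQuAD, choosing an answer comes down to scoring every candidate start and end token and keeping the best valid pair. Here is a minimal sketch of that selection step. The function name `best_span`, the toy tokens and the scores are all made up for illustration, and real implementations usually add more filtering.

```python
from typing import List, Tuple


def best_span(start_scores: List[float], end_scores: List[float],
              max_answer_len: int = 30) -> Tuple[int, int, float]:
    """Return (start, end, score) of the highest-scoring valid span."""
    best = (0, 0, float("-inf"))
    for i, start_score in enumerate(start_scores):
        # The end token cannot come before the start token,
        # and the span length is capped at max_answer_len tokens.
        for j in range(i, min(i + max_answer_len, len(end_scores))):
            score = start_score + end_scores[j]
            if score > best[2]:
                best = (i, j, score)
    return best


# Toy example: hypothetical per-token scores for a short context.
tokens = ["the", "cdc", "estimates", "25", "%", "of", "carriers"]
start = [0.1, 0.2, 0.0, 4.0, 0.3, 0.0, 0.1]
end = [0.0, 0.1, 0.2, 1.0, 3.5, 0.0, 0.2]

i, j, score = best_span(start, end)
answer = " ".join(tokens[i:j + 1])
print(answer, score)
```

The span score is simply the start score plus the end score, which is why a model of this kind can only return text that already appears in the paragraph.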

SQuAD: v1 and v2 datasets

The Stanford Question Answering Dataset (SQuAD) is a dataset for training and evaluating models on the Question Answering task. SQuAD has been released in two versions, v1 and v2. The main difference between the two is that SQuAD v2 also includes samples where the question has no answer in the given paragraph. A model trained on SQuAD v1 will return an answer even if one doesn’t exist. To some extent you can use the probability of the answer to filter out unlikely answers, but this doesn’t always work. On the other hand, training on the SQuAD v2 dataset is a challenging task that requires careful monitoring of precision and hyperparameter tuning.
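To make the "no answer" decision concrete, here is a small sketch of the thresholding convention commonly used with SQuAD v2 models: compare the score of the empty (null) answer against the best span score, and return nothing when the null answer wins by more than a tuned margin. The function name, parameter names and example scores are hypothetical.

```python
def predict_with_null(best_span_text: str, best_span_score: float,
                      null_score: float, null_threshold: float = 0.0) -> str:
    """Return the span text, or "" when the null answer is clearly preferred."""
    # A larger threshold makes the model more willing to return a span;
    # a smaller (or negative) one makes it abstain more often.
    if null_score - best_span_score > null_threshold:
        return ""
    return best_span_text


# Hypothetical scores: the span clearly wins, so it is returned.
confident = predict_with_null("25%", best_span_score=7.5, null_score=2.0)
# Hypothetical scores: the null answer wins, so no answer is returned.
abstained = predict_with_null("25%", best_span_score=1.0, null_score=6.0)
print(repr(confident), repr(abstained))
```

The threshold is usually tuned on a development set, which is part of the extra tuning effort that SQuAD v2 requires.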

Building and testing SQuAD BERT model

With the outbreak of this virus worldwide, there has been a plethora of news articles providing facts, events and other news about the virus. We test how question answering works on articles that the model has never seen before.

To showcase this capability, we have chosen a few informative articles from CNN.

The transformers repository from Hugging Face is an amazing GitHub repo that brings together multiple transformer-based training and inference pipelines. For this question answering task, we will download a pre-trained SQuAD model from https://huggingface.co/models. The model we will use for this article is bert-large-uncased-whole-word-masking-finetuned-squad. As the name suggests, it is the large BERT model with an uncased vocabulary, pre-trained with whole-word masking and fine-tuned on SQuAD. There are three main files we need for using the model:

- bert-large-uncased-whole-word-masking-finetuned-squad-config.json: This is the configuration file, which has the parameters that the code uses to run inference.

- bert-large-uncased-whole-word-masking-finetuned-squad-pytorch_model.bin: This is the actual model file, which has all the weights of the model in PyTorch format.

- bert-large-uncased-whole-word-masking-finetuned-squad-tf_model.h5: This file has the model weights in TensorFlow format. The vocabulary used by the tokenizer is stored separately in a vocab.txt file.

We can load the model we have downloaded using the following commands:

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

tokenizer = AutoTokenizer.from_pretrained('bert-large-uncased-whole-word-masking-finetuned-squad', do_lower_case=True)

model = AutoModelForQuestionAnswering.from_pretrained('bert-large-uncased-whole-word-masking-finetuned-squad')
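Once the model and tokenizer are loaded, answering a question over a single paragraph can look roughly like the sketch below. It assumes a recent transformers release (v4 or later, where the model output exposes `start_logits` and `end_logits`) with PyTorch installed. The function name `answer_question` is hypothetical, and this is not necessarily how the code in my repo does it.

```python
def answer_question(question: str, context: str,
                    model_name: str = "bert-large-uncased-whole-word-masking-finetuned-squad") -> str:
    """Return the most likely answer span for one question and one paragraph."""
    # Imported here so the heavy dependencies load only when the function is called.
    import torch
    from transformers import AutoModelForQuestionAnswering, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForQuestionAnswering.from_pretrained(model_name)
    model.eval()

    # Encode question and context as one sequence; if the pair is too long,
    # truncate only the context (the second segment).
    inputs = tokenizer(question, context, return_tensors="pt",
                       truncation="only_second", max_length=512)
    with torch.no_grad():
        outputs = model(**inputs)

    # Simplification: take the best start and best end independently.
    start = int(torch.argmax(outputs.start_logits))
    end = int(torch.argmax(outputs.end_logits))
    if end < start:
        return ""  # no valid span found

    answer_ids = inputs["input_ids"][0][start:end + 1]
    return tokenizer.decode(answer_ids, skip_special_tokens=True)
```

Calling `answer_question("How many confirmed cases are in Mexico?", paragraph_text)` downloads the model on first use, which takes a while for a model of this size. In practice you would load the model once and reuse it across questions.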

Overall, we have structured our code in two files. You can find both on my GitHub:

- question_answering_inference.py

- question_answering_main.py

The question_answering_inference.py file is a wrapper with supporting functions to process the inputs and outputs. In this file, we load the text from the file path, clean the text (convert all words to lowercase, then remove the stop words), split the text into paragraphs and pass them on to the answer_prediction function in question_answering_main.py. The answer_prediction function returns the answers and their probabilities. We then filter the answers based on a probability threshold and display the remaining answers with their probabilities.
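The paragraph splitting and probability filtering described above can be sketched in a few lines. The helper names, the dictionary layout and the 0.5 threshold are assumptions for illustration, not the exact code from the repo. The 0.96 probability comes from an example later in this post, while the 0.12 value is made up.

```python
import re
from typing import Dict, List


def split_paragraphs(text: str) -> List[str]:
    """Split raw article text on blank lines and normalize whitespace."""
    parts = re.split(r"\n\s*\n", text)
    return [" ".join(p.split()) for p in parts if p.strip()]


def filter_answers(answers: List[Dict], threshold: float = 0.5) -> List[Dict]:
    """Keep answers at or above the threshold, most probable first."""
    kept = [a for a in answers if a["probability"] >= threshold]
    return sorted(kept, key=lambda a: a["probability"], reverse=True)


article = "Mike Ryan leads the WHO program.\n\nDr. Fauci spoke later.\n"
paragraphs = split_paragraphs(article)

# Candidate answers as they might come back from the prediction step.
candidates = [
    {"text": "Dr. Fauci", "probability": 0.12},
    {"text": "Mike Ryan", "probability": 0.96},
]
top = filter_answers(candidates, threshold=0.5)
print(paragraphs, top)
```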

The question_answering_main.py file is the main file which has all the functions required to use the pre-trained model and tokenizer to predict the answer given the paragraphs and questions.

The main driver function in this file is answer_prediction. It loads the model and tokenizer files, then calls functions to convert the paragraphs to text, segment the text, convert the features to the relevant objects and group the objects into batches, and finally predicts the answers with their probabilities.

In order to run the script, run the following command:

python question_answering_inference.py --ques 'How many confirmed cases are in Mexico?' --source 'sample2.txt'

The program takes the following parameters:

- ques: The question to ask, enclosed in single (or double) quotes.

- source: The path of the text file containing the article.
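These two parameters map naturally onto Python's standard argparse module. Here is a minimal sketch of how such a command line interface could be defined. The help strings and the `build_parser` name are my own choices here, and the script in the repo may parse its arguments differently.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Define the two command line parameters described above."""
    parser = argparse.ArgumentParser(
        description="Answer a question from the text of an article.")
    parser.add_argument("--ques", required=True,
                        help="Question to ask, enclosed in quotes")
    parser.add_argument("--source", required=True,
                        help="Path of the text file containing the article")
    return parser


# Parse an explicit argument list instead of sys.argv for demonstration.
args = build_parser().parse_args(
    ["--ques", "How many confirmed cases are in Mexico?",
     "--source", "sample2.txt"])
print(args.ques, args.source)
```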

Model Outputs

We tested this model on several articles about the coronavirus.

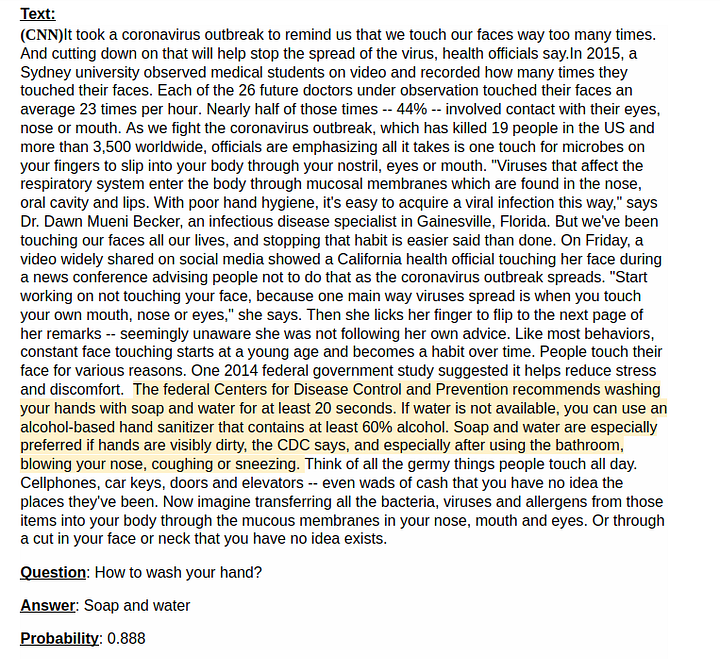

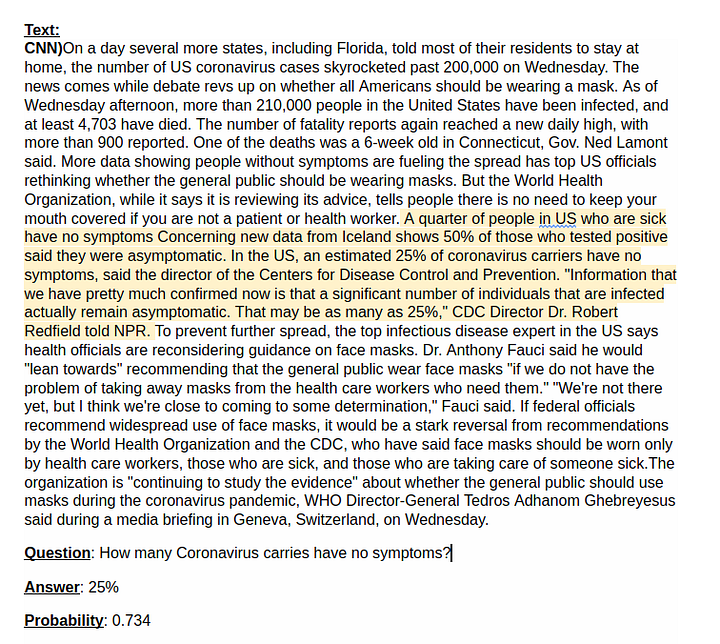

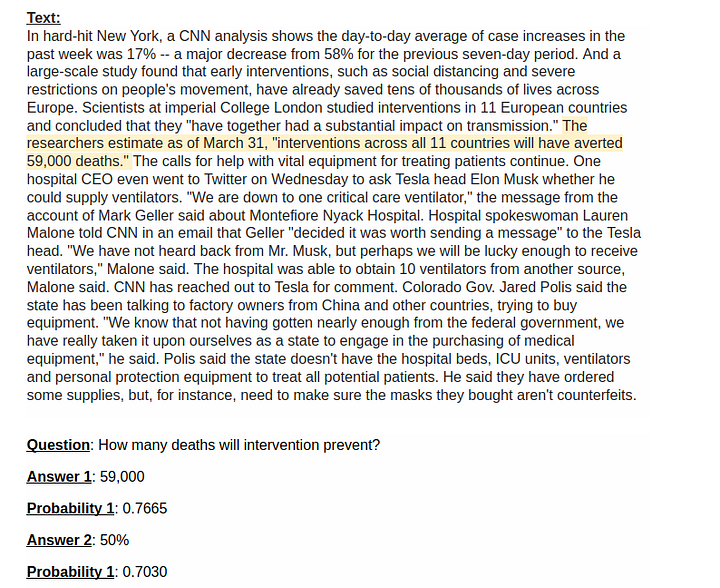

Each of the following examples shows the text and the question passed to the model, along with the answer that was returned and its probability.

The above answer is correct. The model is able to pick the right answer with high confidence.

This is a trickier question, as there are several stats shared in the article. One study from Iceland shows that about 50% of carriers are asymptomatic, whereas the CDC estimates 25%. It’s amazing to see that the model is able to pick and return the correct answer.

The same article later talks about the effect of interventions and how many deaths they can avert. The relevant text is copied below. However, when running the code we pass the full article, not just the subset below. Since the SQuAD model has a limit on how much text it can process at once, we split the full text into chunks and run the model on each chunk in turn. As you can see below, this can mean that the model returns several answers. Here the first answer, with the highest probability, is the correct one.
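One simple way to process a long article in multiple passes is a sliding window with some overlap, so that an answer sitting on a chunk boundary is not cut in half. The sketch below splits on words for simplicity. BERT's actual limit is 512 tokens including the question, so the word counts here are only a rough stand-in, and the function name and default sizes are assumptions.

```python
from typing import List


def chunk_words(words: List[str], max_len: int = 300,
                stride: int = 50) -> List[List[str]]:
    """Split words into windows of at most max_len, overlapping by stride."""
    if max_len <= stride:
        raise ValueError("max_len must be larger than stride")
    chunks = []
    step = max_len - stride
    for start in range(0, len(words), step):
        chunks.append(words[start:start + max_len])
        # Stop once a window has reached the end of the text.
        if start + max_len >= len(words):
            break
    return chunks


# Tiny example: 10 words, windows of 4 words overlapping by 1.
words = [f"w{i}" for i in range(10)]
chunks = chunk_words(words, max_len=4, stride=1)
print(chunks)
```

Each chunk is then passed to the model together with the question, and the answers from all chunks are ranked by probability.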

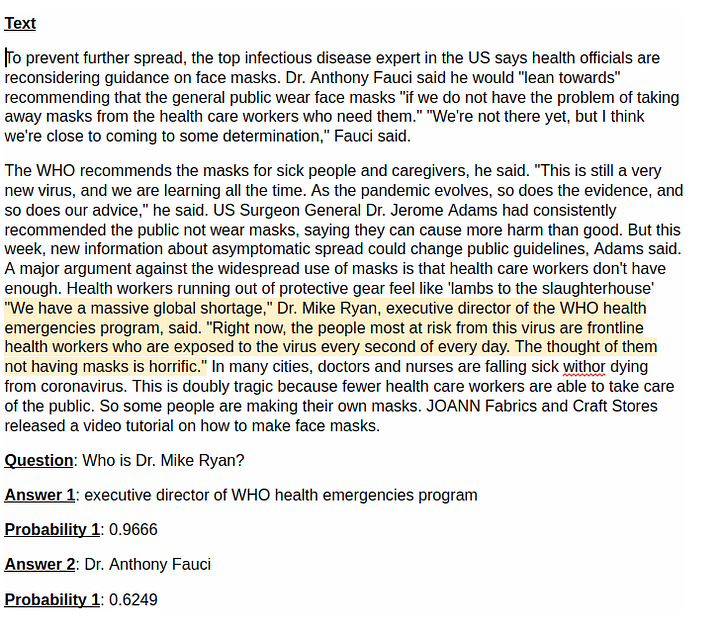

Another example where this happens is shared below. Here the correct answer is that Mike Ryan is the director of the WHO health emergencies program. The model is able to pick that up with a probability of 96%. However, it also returns Dr. Fauci as a low-probability answer. Here as well, we can use the probability score to get to the correct answer.

The above examples show how powerful SQuAD question answering can be for getting answers from very long news texts. Another striking takeaway is how well models trained on SQuAD generalize to texts they have never seen before.

That said, there are still a few challenges that keep this approach from working perfectly in all situations.

Challenges

A few challenges with using SQuAD v1 for Question Answering are:

1) Many times, the model will provide an answer with a fairly high probability even if the answer doesn’t exist in the text, which leads to false positives.

2) While in many cases answers can be ranked correctly by probability, this is not bulletproof. We have observed cases where the incorrect answer has a similar or higher probability than the correct answer.

3) The model seems sensitive to punctuation in the input text. The text fed to the model has to be clean (no junk words, symbols, etc.), otherwise it may lead to wrong results.
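For the third challenge, a light cleaning pass before inference can help. Here is a minimal sketch that strips stray symbols and collapses whitespace. Which characters count as "junk" is an assumption that you should adapt to your own articles, and the function name is hypothetical.

```python
import re


def clean_text(text: str) -> str:
    """Remove stray symbols and collapse runs of whitespace."""
    # Keep word characters, whitespace and basic punctuation;
    # replace everything else with a space.
    text = re.sub(r"[^\w\s.,;:!?%$'()-]", " ", text)
    # Collapse repeated whitespace left behind by the substitution.
    return re.sub(r"\s+", " ", text).strip()


cleaned = clean_text("Cases rose 25% ★★ in Mexico ~~ today.")
print(cleaned)
```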

The first two challenges can be resolved to some extent using a SQuAD v2 model instead of SQuAD v1. The SQuAD v2 dataset has samples in the training set where there are no answers for the question asked. This allows the model to better judge when no answer should be returned. We have experience using SQuAD v2 models. Please contact me through my website if you are interested in applying this to your use case.

Conclusion

In this article, we saw how Question Answering can be easily implemented to build systems capable of finding information from long news articles. I encourage you to give this a shot for yourself, and learn something new while you are in quarantine.

I am extremely passionate about NLP, Transformers and deep learning in general. I have my own deep learning consultancy and love to work on interesting problems. I have helped many startups deploy innovative AI-based solutions. Check us out at https://deeplearninganalytics.org/.

Original full article published on Medium.

References

- Transformer Model Paper: https://arxiv.org/abs/1706.03762

- BERT Model Explained: https://towardsdatascience.com/bert-explained-state-of-the-art-language-model-for-nlp-f8b21a9b6270

- Hugging Face